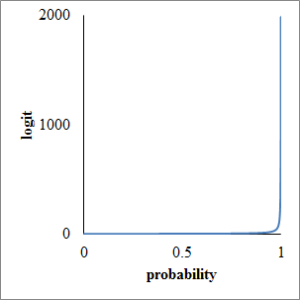

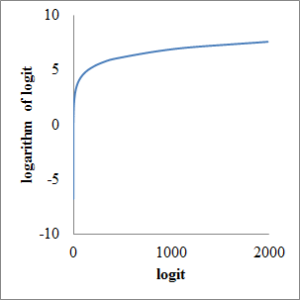

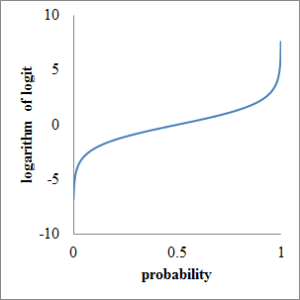

In this article, I’d like to describe how to calculate an appropriate sample size for Cox proportional hazards analysis from a cross tabulation, the α error and the β error. The α error is the type I error, whose accepted level is called the statistical significance level, and the β error is the type II error. 1 − β is called the statistical power. α is usually set at 0.05 (two-sided) and β at 0.2 (one-sided). As a result, $Z_{\alpha/2}$ is 1.96 and $Z_\beta$ is 0.84.
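
As a quick check of these two values, the following Python sketch derives both quantiles from the standard normal distribution with `statistics.NormalDist` from the standard library. The variable names are illustrative only:

```python
from statistics import NormalDist

alpha = 0.05  # significance level (type I error), two-sided
beta = 0.20   # type II error; power = 1 - beta = 0.80

std_normal = NormalDist()  # standard normal distribution N(0, 1)
z_alpha_half = std_normal.inv_cdf(1 - alpha / 2)  # upper alpha/2 quantile
z_beta = std_normal.inv_cdf(1 - beta)             # upper beta quantile (one-sided)

print(f"Z_alpha/2 = {z_alpha_half:.2f}, Z_beta = {z_beta:.2f}")
# Z_alpha/2 = 1.96, Z_beta = 0.84
```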

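I’d like to assume that $S_1$ is the survival rate of the risk group (or intervention group) and $S_0$ is the survival rate of the control group, without the risk or intervention. $\theta$ is the ratio of their logarithms, $\theta = \ln S_1 / \ln S_0$, which equals the hazard ratio under the proportional hazards assumption ($S_1 = S_0^{\theta}$).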
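
A minimal sketch of $\theta$ as the ratio of log survival rates. The function name `hazard_ratio` and the example survival rates are hypothetical:

```python
import math

def hazard_ratio(s1: float, s0: float) -> float:
    """theta = ln(S1) / ln(S0).

    Under proportional hazards S1 = S0 ** theta, so theta is the hazard ratio
    of the risk/intervention group relative to the control group.
    """
    if not (0 < s1 < 1 and 0 < s0 < 1):
        raise ValueError("survival rates must lie strictly between 0 and 1")
    return math.log(s1) / math.log(s0)

# Hypothetical example: control survival 0.5, risk-group survival 0.25
theta = hazard_ratio(0.25, 0.5)
print(f"theta = {theta:.2f}")  # theta = 2.00
```

Because the risk group survives worse than the control group here, $\theta > 1$; an effective intervention would give $\theta < 1$.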

I’d like to use the cross tabulation below. The endpoint can be replaced with death or failure.

|  | ENDPOINT | CENSOR | Marginal total |
| --- | --- | --- | --- |
| POSITIVE | a | b | a + b |
| NEGATIVE | c | d | c + d |
| Marginal total | a + c | b + d | N |
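
If the two survival rates are read directly off this table, one simple reading (an assumption, not stated in the text) is that censored subjects are event-free, giving $S_1 = b/(a+b)$ for the POSITIVE row and $S_0 = d/(c+d)$ for the NEGATIVE row. This crude proportion ignores differences in follow-up time. The helper `survival_rates` and the counts below are hypothetical:

```python
def survival_rates(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Crude survival rates from the 2x2 table.

    Assumption: CENSOR counts are treated as event-free survivors.
    a, b: ENDPOINT / CENSOR counts in the POSITIVE (risk or intervention) row
    c, d: ENDPOINT / CENSOR counts in the NEGATIVE (control) row
    """
    s1 = b / (a + b)  # survival rate of the risk/intervention group
    s0 = d / (c + d)  # survival rate of the control group
    return s1, s0

s1, s0 = survival_rates(a=75, b=25, c=50, d=50)  # hypothetical counts
print(s1, s0)  # 0.25 0.5
```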

You can calculate the estimated number of deaths (e) in both groups combined with Freedman’s approximation:

$$
e = \frac{(Z_{\alpha/2} + Z_\beta)^2 (1 + \theta)^2}{(1 - \theta)^2}
$$
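
A minimal Python sketch of Freedman’s approximation for the expected total number of deaths, with the formula repeated in the docstring. The function name `freedman_events` and rounding up with `math.ceil` are illustrative choices:

```python
import math
from statistics import NormalDist

def freedman_events(theta: float, alpha: float = 0.05, beta: float = 0.20) -> int:
    """Expected total number of deaths e in both groups (Freedman's approximation).

        e = (Z_{alpha/2} + Z_beta)^2 * (1 + theta)^2 / (1 - theta)^2
    """
    if theta <= 0 or theta == 1:
        raise ValueError("theta must be positive and different from 1")
    std_normal = NormalDist()
    z_alpha_half = std_normal.inv_cdf(1 - alpha / 2)  # 1.96 for alpha = 0.05
    z_beta = std_normal.inv_cdf(1 - beta)             # 0.84 for beta = 0.20
    e = (z_alpha_half + z_beta) ** 2 * (1 + theta) ** 2 / (1 - theta) ** 2
    return math.ceil(e)  # round up to a whole number of deaths

print(freedman_events(2.0))  # 71
```

Note that $\theta$ and $1/\theta$ give the same e, because swapping the two groups does not change the required number of events.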

You can then calculate the entry size (n) in each group, assuming equal allocation, as follows:

$$
n = \frac{e}{2 - S_1 - S_0}
$$
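
The per-group entry size can be sketched as follows, assuming 1:1 allocation so that each pair of subjects (one per group) contributes an expected $(1 - S_1) + (1 - S_0)$ deaths. `entry_size_per_group` is a hypothetical helper name, and the inputs (e = 71, $S_1$ = 0.25, $S_0$ = 0.5) are hypothetical:

```python
import math

def entry_size_per_group(e: float, s1: float, s0: float) -> int:
    """Entry size n in each group, assuming 1:1 allocation.

    n subjects per group yield n * (1 - S1) + n * (1 - S0) expected deaths,
    so setting that equal to e gives n = e / (2 - S1 - S0).
    """
    expected_death_prob = (1 - s1) + (1 - s0)  # expected deaths per pair of subjects
    if expected_death_prob <= 0:
        raise ValueError("at least one group must have survival below 1")
    return math.ceil(e / expected_death_prob)  # round up to whole subjects

n = entry_size_per_group(e=71, s1=0.25, s0=0.5)  # hypothetical inputs
print(n)  # 57
```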

Finally, you have to correct the entry size for the anticipated drop-out rate (w). Because there are two groups, twice the corrected n is needed for the whole trial.
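
For the drop-out correction, a common adjustment is $n' = n / (1 - w)$; treat it as an assumption here, since other corrections are also in use. `corrected_entry_size` and the 10% drop-out rate are hypothetical:

```python
import math

def corrected_entry_size(n: int, w: float) -> tuple[int, int]:
    """Correct the per-group entry size n for an anticipated drop-out rate w.

    Assumption: uses the common adjustment n' = n / (1 - w).
    Returns (n' per group, 2 * n' for the whole trial).
    """
    if not 0 <= w < 1:
        raise ValueError("drop-out rate w must satisfy 0 <= w < 1")
    n_corrected = math.ceil(n / (1 - w))  # round up to whole subjects
    return n_corrected, 2 * n_corrected

per_group, total = corrected_entry_size(n=57, w=0.1)  # hypothetical w = 10%
print(per_group, total)  # 64 128
```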