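The scalar or dot product of two vectors $a_1\mathbf{i} + a_2\mathbf{j} + a_3\mathbf{k}$ and $b_1\mathbf{i} + b_2\mathbf{j} + b_3\mathbf{k}$ is $a_1b_1 + a_2b_2 + a_3b_3$, and the vectors are perpendicular or orthogonal if $a_1b_1 + a_2b_2 + a_3b_3 = 0$. From the point of view of matrices we can consider these vectors as column vectors

$$A = \begin{pmatrix} a_1 \\ a_2 \\ a_3 \end{pmatrix}, \qquad B = \begin{pmatrix} b_1 \\ b_2 \\ b_3 \end{pmatrix}$$

from which it follows that $A^T B = a_1b_1 + a_2b_2 + a_3b_3$.

This leads us to define the scalar product of real column vectors $A$ and $B$ as $A^T B$ and to define $A$ and $B$ to be orthogonal if $A^T B = 0$.

It is convenient to generalize this to cases where the vectors can have complex components and we adopt the following definition:

Definition 1. Two column vectors $A$ and $B$ are called orthogonal if $\bar{A}^T B = 0$, and $\bar{A}^T B$ is called the scalar product of $A$ and $B$.

It should be noted also that if a column vector $A$, regarded as a matrix, is unitary then $\bar{A}^T A = 1$, which means that the scalar product of $A$ with itself is 1 or equivalently $A$ is a unit vector, i.e. having length 1. Thus a unitary column vector is a unit vector. Because of these remarks we have the following

Definition 2. A set of vectors $X_1, X_2, \ldots$ for which

$$\bar{X}_j^T X_k = \begin{cases} 0 & j \ne k \\ 1 & j = k \end{cases}$$

is called a unitary set or system of vectors or, in the case where the vectors are real, an orthonormal set or an orthogonal set of unit vectors.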
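Definitions 1 and 2 can be checked numerically. The following is only a minimal sketch in plain Python: the names `scalar_product` and `is_unitary_set` are our own, vectors are stored as lists, and a small tolerance is assumed to absorb floating-point rounding.

```python
from itertools import product


def scalar_product(a, b):
    """Scalar product conj(a)^T b of two column vectors stored as lists."""
    return sum(complex(x).conjugate() * y for x, y in zip(a, b))


def is_unitary_set(vectors, tol=1e-12):
    """True if conj(X_j)^T X_k is 1 for j == k and 0 for j != k (Definition 2)."""
    for (j, xj), (k, xk) in product(enumerate(vectors), repeat=2):
        expected = 1.0 if j == k else 0.0
        if abs(scalar_product(xj, xk) - expected) > tol:
            return False
    return True


# Two complex vectors of length 1 that are orthogonal to each other.
s = 2 ** -0.5
x1 = [s, s * 1j]
x2 = [s, -s * 1j]
print(is_unitary_set([x1, x2]))
```

Without the complex conjugate, the product of `x1` with itself would be zero rather than 1, which is why the definition conjugates the first vector.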


直交ベクトル

二つのベクトル および

のスカラー積またはドット積は

であり,

ならばそれらのベクトルは垂直または直交します.行列の観点からこれらのベクトルは列ベクトルと考えることができます.

これらは という性質があります.

これにより 実数の列ベクトル および

と定義し,

なら

および

は 直交 するとの定義に至ります.

これらを複素数を要素に持つ場合に一般化し,次の定義を採用するのは便利です.

定義 1. 二つの列ベクトル および

は

なら 直交 と呼び,

は

および

の スカラー積 と呼びます.

仮に がユニタリ行列なら

であることに注意が必要です.それは

とそれ自身とのスカラー積が 1 であり,

が 単位ベクトル であることすなわち長さが 1 であることと等価です.ゆえにユニタリ列ベクトルは単位ベクトルです.これらの特徴から以下を得ます.

定義 2. ベクトルの集合 について

を unitary set or system of vectors と呼び,あるいはベクトルが実数の場合には 正規直交の集合 または 単位ベクトルの直交の集合 と呼びます.

Orthogonal and unitary matrices

A real matrix $A$ is called an orthogonal matrix if its transpose is the same as its inverse, i.e. if $A^T = A^{-1}$ or $A^T A = I$.

A complex matrix $A$ is called a unitary matrix if its complex conjugate transpose is the same as its inverse, i.e. if $\bar{A}^T = A^{-1}$ or $\bar{A}^T A = I$. It should be noted that a real unitary matrix is an orthogonal matrix.
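The unitary condition (conjugate transpose times the matrix equals the unit matrix) is easy to test directly. The sketch below uses nested lists for matrices; the helper names `conj_transpose`, `matmul` and `is_unitary` and the tolerance are our own assumptions, not part of the text.

```python
import math


def conj_transpose(a):
    """Complex conjugate transpose of a matrix stored as a list of rows."""
    return [[complex(a[j][k]).conjugate() for j in range(len(a))]
            for k in range(len(a[0]))]


def matmul(a, b):
    """Ordinary matrix product of two conformable matrices."""
    return [[sum(a[j][l] * b[l][k] for l in range(len(b)))
             for k in range(len(b[0]))] for j in range(len(a))]


def is_unitary(a, tol=1e-12):
    """True if conj(A)^T A equals the unit matrix within the tolerance."""
    n = len(a)
    p = matmul(conj_transpose(a), a)
    return all(abs(p[j][k] - (1 if j == k else 0)) <= tol
               for j in range(n) for k in range(n))


theta = 0.3
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta), math.cos(theta)]]  # real, hence orthogonal
s = 2 ** -0.5
u = [[s, s], [s * 1j, -s * 1j]]                  # complex unitary matrix
print(is_unitary(rotation), is_unitary(u))
```

For a real matrix the conjugation does nothing, so the same function also tests orthogonality.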

直交行列とユニタリ行列

実行列 はその転置行列が自身の逆行列と同じ場合,すなわち仮に

または

ならば 直交行列 と呼びます.

複素行列 は自身の複素共軛転置行列が逆行列と同じなら,すなわち仮に

または

ならば ユニタリ行列 と呼びます.実ユニタリ行列は直交行列であることに注意が必要です.

Inverse of a matrix

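If for a given square matrix $A$ there exists a matrix $B$ such that $AB = I$, then $B$ is called an inverse of $A$ and is denoted by $A^{-1}$. The following theorem is fundamental.

Theorem 11. If $A$ is a non-singular square matrix of order $n$ [i.e. $\det(A) \ne 0$], then there exists a unique inverse $A^{-1}$ such that $AA^{-1} = A^{-1}A = I$ and we can express $A^{-1}$ in the following form

$$A^{-1} = \frac{(A_{jk})^T}{\det(A)}$$

where $(A_{jk})$ is the matrix of cofactors $A_{jk}$ and $(A_{jk})^T = (A_{kj})$ is its transpose.

The following express some properties of the inverse:

$$(AB)^{-1} = B^{-1}A^{-1}, \qquad (A^{-1})^{-1} = A$$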
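The cofactor formula for the inverse (transposed cofactor matrix divided by the determinant) can be sketched as follows. This is an illustration only: the function names are our own, exact `Fraction` arithmetic is assumed so that results can be compared without rounding, and cofactor expansion is far too slow for large matrices.

```python
from fractions import Fraction


def minor(a, j, k):
    """Matrix left after deleting row j and column k."""
    return [row[:k] + row[k + 1:] for i, row in enumerate(a) if i != j]


def det(a):
    """Determinant by cofactor expansion along the first row."""
    if not a:
        return 1  # empty determinant, so that a 1x1 cofactor equals 1
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** k * a[0][k] * det(minor(a, 0, k)) for k in range(len(a)))


def inverse(a):
    """Inverse as the transposed cofactor matrix divided by det(A)."""
    n = len(a)
    d = det(a)
    if d == 0:
        raise ValueError("matrix is singular, no inverse exists")
    cof = [[(-1) ** (j + k) * det(minor(a, j, k)) for k in range(n)]
           for j in range(n)]
    # Entry (j, k) of the inverse is cofactor A_kj divided by det(A).
    return [[Fraction(cof[k][j]) / d for k in range(n)] for j in range(n)]


print(inverse([[2, 1], [5, 3]]))
```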

逆行列

仮にある正方行列 があって,

のような性質を有する

が存在するなら

は

の 逆行列 と呼ばれ

と記述します.下記の定理が成り立ちます.

11. 仮に が n 次の非特異的正方行列の場合,すなわち

の時,唯一のの逆行列

が存在し,

であって

を次の形で表現できます.

ここで は余因子

の行列であって

はその転置行列です.

以下は逆行列のいくつかの性質を示しています.

Theorems on determinants

- The value of a determinant remains the same if rows and columns are interchanged. In symbols,

.

- If all elements of any row [or column] are zero except for one element, then the value of the determinant is equal to the product of that element by its cofactor. In particular, if all elements of a row [or column] are zero the determinant is zero.

- An interchange of any two rows [or columns] changes the sign of the determinant.

- If all elements in any row [or column] are multiplied by a number, the determinant is also multiplied by this number.

- If any two rows [or columns] are the same or proportional, the determinant is zero.

- If we express the elements of each row [or column] as the sum of two terms, then the determinant can be expressed as the sum of two determinants having the same order.

- If we multiply the elements of any row [or column] by a given number and add to corresponding elements of any other row [or column], then the value of the determinant remains the same.

- If

and

are square matrices of the same order, then

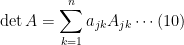

- The sum of the products of the elements of any row [or column] by the cofactors of another row [or column] is zero. In symbols,

or

if

If

, the sum is

by (10).

- Let

represent row vectors [or column vectors] of a square matrix

of order n. Then

if and only if there exist constants [scalars]

not all zero such that

where O is the null or zero row matrix. If condition (13) is satisfied we say that the vectors

are linearly dependent. A matrix

such that

is called a singular matrix. If

, then

is a non-singular matrix.

In practice we evaluate a determinant of order n by using Theorem 7 successively to replace all but one of the elements in a row or column by zeros and then using Theorem 2 to obtain a new determinant of order n – 1. We continue in this manner, arriving ultimately at determinants of order 2 or 3 which are easily evaluated.
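The reduction procedure just described can be sketched in a few lines. This is only an illustration under our own naming (`det_by_elimination`), using exact fractions; it applies the row-addition theorem to clear a column, the row-interchange theorem when a zero pivot must be swapped away, and the single-nonzero-element theorem to drop to order n - 1.

```python
from fractions import Fraction


def det_by_elimination(a):
    """Determinant via repeated row operations on a square matrix."""
    m = [[Fraction(x) for x in row] for row in a]
    n = len(m)
    sign = 1
    result = Fraction(1)
    for c in range(n):
        # Find a row at or below the diagonal with a nonzero entry in column c.
        pivot = next((r for r in range(c, n) if m[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)  # a zero column in the reduced determinant
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]  # row interchange flips the sign
            sign = -sign
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            # Adding a multiple of one row to another leaves the value unchanged.
            m[r] = [x - factor * y for x, y in zip(m[r], m[c])]
        # Column c now has a single nonzero element: multiply by it and recurse
        # on the remaining determinant of order one less.
        result *= m[c][c]
    return sign * result


print(det_by_elimination([[1, 2, 3], [0, 1, 4], [5, 6, 0]]))
```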

行列式の定理

- 行列式の値は,行と列が入れ替わっても変化しません.記法では

.

- 任意の行または列の1つを除く全要素がゼロならば行列式の値は,そのゼロでない要素の余因子の積に等しくなります.特に,ある行または列の全要素がゼロならば行列式もゼロになります.

- 任意の 2 行または 2 列を交換すると行列式の符号が変化します.

- 任意の行または列の全要素にある数をかけると,その行列式もその数でかけられたものになります.

- 任意の 2 行または 2 列が同じか比例するならその行列式はゼロになります.

- 各行または各列の要素を 2 項で表現できるなら,その行列式は同次の二つの行列式の和で表現できます.

- 任意の行または列の要素にある数をかけ,任意の他の行または列の対応する要素に足していくと,その行列式の値は同じになります.

- 仮に

および

が同次の正方行列なら

- 他の行または列の余因子による任意の行または列の要素の積和はゼロとなります.記法では

or

if

仮に

なら

の和は (10) によります.

- ここで

が n 次正方行列

の行ベクトルまたは列ベクトルを表すとします.すると

となるのはすべてゼロではない以下の条件を満たす定数またはスカラー

が存在するときのみです.

ここで O はヌル行列または零行列です.仮に条件式 (13) が満たされるならベクトル

は 線形従属 であると示すことができます.ある行列

が

を満たすなら 特異行列 と呼びます.仮に

であるなら

は 非特異行列 です.

実際には,定理 7 によりある行または列の一つを除いた全要素を 0 で置換し,更に定理 2 を用いて n – 1 次の新しい行列式を得ることで n 次の行列式を評価できます.この方法を続けることで,最終的に 2 次または 3 次の行列式に到達するため,評価は容易です.

Determinants

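If the matrix $A$ in (1) is a square matrix, then we associate with $A$ a number denoted by

$$\Delta = \begin{vmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{vmatrix}$$

called the determinant of $A$ of order $n$, written $\det(A)$. In order to define the value of a determinant, we introduce the following concepts.

1. Minor

Given any element $a_{jk}$ of $\Delta$ we associate a new determinant of order $(n - 1)$, obtained by removing all elements of the $j$th row and $k$th column, called the minor of $a_{jk}$.

2. Cofactor

If we multiply the minor of $a_{jk}$ by $(-1)^{j+k}$, the result is called the cofactor of $a_{jk}$ and is denoted by $A_{jk}$. The value of the determinant is then defined as the sum of the products of the elements in any row [or column] by their corresponding cofactors and is called the Laplace expansion. In symbols,

$$\det(A) = \sum_{k=1}^{n} a_{jk}A_{jk} \tag{10}$$

We can show that this value is independent of the row [or column] used.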
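The Laplace expansion translates directly into a recursive function. The sketch below (names `minor` and `det_laplace` are our own, rows are indexed from 0) expands along a chosen row so that the independence of the result from the row can be observed on an example.

```python
def minor(a, j, k):
    """Array left after removing row j and column k (0-based indices)."""
    return [row[:k] + row[k + 1:] for i, row in enumerate(a) if i != j]


def det_laplace(a, row=0):
    """Determinant as the sum of elements of `row` times their cofactors."""
    n = len(a)
    if n == 1:
        return a[0][0]
    return sum(a[row][k] * (-1) ** (row + k) * det_laplace(minor(a, row, k))
               for k in range(n))


a = [[1, 2, 3], [0, 1, 4], [5, 6, 0]]
# The same value is obtained whichever row is used for the expansion.
print([det_laplace(a, row) for row in range(3)])
```

The recursion visits n! terms, so this form is useful for understanding the definition rather than for computing large determinants.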

行列式

仮に (1) における行列 が正方行列なら,

に対して下記に示すある数を関連付けられます.

これは n次 の の 行列式 と呼び, det(A) と記述します.行列式の値を定義するために次の概念を導入しましょう.

1. 小行列式

の任意の要素

がある時, j 番目の行および k 番目の列の全要素を除去して得られた (n – 1) 次の新しい行列式を関連付け,これを

の小行列式と呼びます.

2. 余因子

仮に の小行列式に

を乗算するなら,それらの対応する余因子による任意の行(または列)における要素の結果は ラプラス展開 と呼びます.記法では

この値は用いられる行または列によらず独立です.

Some special definitions and operations involving matrices

1. Equality of Matrices

Two matrices $A = (a_{jk})$ and $B = (b_{jk})$ of the same order [i.e. equal numbers of rows and columns] are equal if and only if $a_{jk} = b_{jk}$.

2. Addition of Matrices

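If $A = (a_{jk})$ and $B = (b_{jk})$ have the same order we define the sum of $A$ and $B$ as $A + B = (a_{jk} + b_{jk})$.

Note that the commutative and associative laws for addition are satisfied by matrices, i.e. for any matrices $A$, $B$, $C$ of the same order

$$A + B = B + A, \qquad A + (B + C) = (A + B) + C$$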

3. Subtraction of Matrices

If $A = (a_{jk})$, $B = (b_{jk})$ have the same order, we define the difference of $A$ and $B$ as $A - B = (a_{jk} - b_{jk})$.

4. Multiplication of a Matrix by a Number

If $A = (a_{jk})$ and $\lambda$ is any number or scalar, we define the product of $A$ by $\lambda$ as $\lambda A = A\lambda = (\lambda a_{jk})$.

5. Multiplication of Matrices

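If $A = (a_{jk})$ is an $m \times n$ matrix while $B = (b_{jk})$ is an $n \times p$ matrix, then we define the product $A \cdot B$ or $AB$ as the matrix $C = (c_{jk})$ where

$$c_{jk} = \sum_{l=1}^{n} a_{jl}b_{lk}$$

and where $C$ is of order $m \times p$.

Note that in general $AB \ne BA$, i.e. the commutative law for multiplication of matrices is not satisfied in general. However, the associative and distributive laws are satisfied, i.e.

$$A(BC) = (AB)C, \qquad A(B + C) = AB + AC, \qquad (B + C)A = BA + CA$$

A matrix $A$ can be multiplied by itself if and only if it is a square matrix. The product $A \cdot A$ can in such case be written $A^2$. Similarly we define powers of a square matrix, i.e. $A^3 = A \cdot A^2$, $A^4 = A \cdot A^3$, etc.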
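The row-by-column rule for the product, and the failure of the commutative law, can be seen on a small example. This is a minimal sketch with matrices as nested lists; the function name `matmul` is our own.

```python
def matmul(a, b):
    """Product of an m x n matrix and an n x p matrix, giving an m x p matrix."""
    if len(a[0]) != len(b):
        raise ValueError("columns of the first factor must match rows of the second")
    return [[sum(a[j][l] * b[l][k] for l in range(len(b)))
             for k in range(len(b[0]))] for j in range(len(a))]


a = [[1, 2], [3, 4]]
b = [[0, 1], [1, 0]]
print(matmul(a, b))  # multiplying by b on the right swaps the columns of a
print(matmul(b, a))  # multiplying by b on the left swaps the rows of a
```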

6. Transpose of a Matrix

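If we interchange rows and columns of a matrix $A$, the resulting matrix is called the transpose of $A$ and is denoted by $A^T$. In symbols, if $A = (a_{jk})$ then $A^T = (a_{kj})$.

We can prove that

$$(A + B)^T = A^T + B^T, \qquad (AB)^T = B^T A^T, \qquad (A^T)^T = A$$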

7. Symmetric and Skew-Symmetric matrices

A square matrix $A$ is called symmetric if $A^T = A$ and skew-symmetric if $A^T = -A$.

Any real square matrix [i.e. one having only real elements] can always be expressed as the sum of a real symmetric matrix and a real skew-symmetric matrix.
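One standard way to obtain such a decomposition is to take half the sum and half the difference of the matrix and its transpose. The sketch below assumes this construction; the function names are our own and exact fractions are used so that the halves stay exact.

```python
from fractions import Fraction


def transpose(a):
    """Interchange rows and columns."""
    return [list(col) for col in zip(*a)]


def symmetric_skew_parts(a):
    """Return (S, K) with S symmetric, K skew-symmetric and S + K equal to A."""
    at = transpose(a)
    n = len(a)
    sym = [[Fraction(a[j][k] + at[j][k]) / 2 for k in range(n)] for j in range(n)]
    skew = [[Fraction(a[j][k] - at[j][k]) / 2 for k in range(n)] for j in range(n)]
    return sym, skew


sym, skew = symmetric_skew_parts([[1, 2], [4, 3]])
print(sym, skew)
```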

8. Complex Conjugate of a Matrix

If all elements $a_{jk}$ of a matrix $A$ are replaced by their complex conjugates $\bar{a}_{jk}$, the matrix obtained is called the complex conjugate of $A$ and is denoted by $\bar{A}$.

9. Hermitian and Skew-Hermitian Matrices

A square matrix $A$ which is the same as the complex conjugate of its transpose, i.e. for which $A = \bar{A}^T$, is called Hermitian. If $A = -\bar{A}^T$, then $A$ is called skew-Hermitian. If $A$ is real these reduce to symmetric and skew-symmetric matrices respectively.

10. Principal Diagonal and Trace of a Matrix

If $A = (a_{jk})$ is a square matrix, then the diagonal which contains all elements $a_{jk}$ for which $j = k$ is called the principal or main diagonal, and the sum of all such elements is called the trace of $A$.

A matrix for which $a_{jk} = 0$ when $j \ne k$ is called a diagonal matrix.

11. Unit Matrix

A square matrix in which all elements of the principal diagonal are equal to 1 while all other elements are zero is called the unit matrix and is denoted by $I$. An important property of $I$ is that

$$AI = IA = A, \qquad I^n = I, \quad n = 1, 2, 3, \ldots$$

The unit matrix plays a role in matrix algebra similar to that played by the number one in ordinary algebra.

12. Zero or Null matrix

A matrix whose elements are all equal to zero is called the null or zero matrix and is often denoted by $O$ or simply 0. For any matrix $A$ having the same order as 0 we have

$$A + 0 = 0 + A = A$$

Also if $A$ and 0 are square matrices, then

$$A0 = 0A = 0$$

The zero matrix plays a role in matrix algebra similar to that played by the number zero of ordinary algebra.

いくつかの行列を含む特殊な定義と演算

1. 行列が等しい

二つの行列 および

が同じ次数で(すなわち行と列の数が同じで)

の時にのみ 等しい.

2. 行列の和

仮に および

が同じ次数ならば

および

の 和 を

と定義できます.

行列の交換法則と結合法則は,すなわちある同じ次数の行列 を下記のように記述します.

3. 行列の差

仮に ,

が同じ次数を有するなら

および

の 差 を

と定義できます.

4. 行列のスカラー倍

仮に があって

が任意の数またはスカラーの時

の

による 積 を

と定義できます.

5. 行列の積

仮に が

行列で

が

行列の時,

または

の 積 を行列

と定義できます.ここで

また は

次です.

一般に すなわち行列の積の交換法則は成り立たないことに注意してください.しかしながら結合法則と分配法則は成り立ちます,すなわち

ある行列 がそれ自身との積をつくれるのは正方行列の場合のみです.積

は

と記述します.同様に行列の累乗を定義できます.すなわち

などです.

6. 行列の転置

行列 の行と列を入れ替えることができるなら,その結果得られる行列は

の 転置 と呼び,

と記述します.記号では,仮に

ならば

と記述します.

以下を証明できます.

7. 対称行列と歪対称行列

ある正方行列 は

の時 対称 と呼び,

の時 歪対称 と呼びます.

任意の実正方行列(すなわち実数の要素のみからなる実正方行列)は常に実対称行列と実歪対称行列の和で表現できます.

8. 行列の複素共役

仮に行列 のすべての要素

が複素共役

で置き換えられたら,その結果得られた行列は

の 複素共役 と呼び,

と記述します.

9. エルミート行列及び歪エルミート行列

ある行列 がそれ自身の転置の複素共役に等しい時,すなわち

であるなら エルミート行列 と呼びます.仮に

の場合は

は 歪エルミート行列 と呼びます.仮に

が実行列ならこれらは対称行列および歪対称行列にそれぞれ短縮されます.

10. 主対角線と行列のトレース

仮に を正方行列とすると対角線上のすべての要素

について

であるものを principal あるいは 主対角線 と呼び,主対角線上の全要素の和を

の トレース と呼びます.

ある行列の なる要素について

の時その行列を 対角行列 と呼びます.

11. 単位行列

ある正方行列について主対角線上の全要素が 1 に等しく他の要素が全てゼロに等しい時 単位行列 と呼び, と記述します.

の属性については非常に重要です.

単位ベクトルは行列代数において,普通の代数における数 1 と同じ役割を果たします.

12. 零行列またはヌル行列

ある行列についてその要素が全てゼロに等しいなら ヌル行列 または 零行列 と呼び, または単に 0 と記述します.すべての行列

について同次数の 0 を考えると,

また仮に および 0 が正方行列なら

零行列は行列代数においては通常の対数における数 0 と同じ役割を果たします.

Definition of a matrix

A matrix of order $m \times n$, or $m$ by $n$ matrix, is a rectangular array of numbers having $m$ rows and $n$ columns. It can be written in the form

$$A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix} \tag{1}$$

Each number $a_{jk}$ in this matrix is called an element. The subscripts $j$ and $k$ indicate respectively the row and column of the matrix in which the element appears.

We shall often denote a matrix by a letter, such as $A$ in (1), or by the symbol $(a_{jk})$ which shows a representative element.

A matrix having only one row is called a row matrix or row vector while a matrix having only one column is called a column matrix or column vector. If the number of rows $m$ and columns $n$ are equal the matrix is called a square matrix of order $n \times n$ or briefly $n$. A matrix is said to be a real matrix or complex matrix according as its elements are real or complex numbers.

行列の定義

m × n 行列 または mn 行列 は m 行および n 列を有する長方形の配列です.下記の形式で記述できます.

この配列内のそれぞれの数 を 要素 と呼びます.添字の

および

は,要素の出現する行列における行と列をそれぞれ示しています.

行列はしばしば (1) における のような 1 文字や,代表的な要素を示す記号

で記述します.

ただ 1 行からなる行列を row matrix または 行ベクトル と呼び,ただ 1 列からなる行列を column matrix または 列ベクトル と呼びます.仮に行数 と列数が

が等しいならその行列を次数

または単に

次の 正方行列 と呼びます.行列はその要素が実数か複素数かによって 実行列 または 複素行列 と呼びます.

Special curvilinear coordinates

1. Cylindrical coordinates

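Transformation equations:

$$x = \rho\cos\phi, \quad y = \rho\sin\phi, \quad z = z$$

where $\rho \ge 0$, $0 \le \phi < 2\pi$, $-\infty < z < \infty$.

Element of arc length:

$$ds^2 = d\rho^2 + \rho^2\,d\phi^2 + dz^2$$

Jacobian:

$$\frac{\partial(x, y, z)}{\partial(\rho, \phi, z)} = \rho$$

Element of volume:

$$dV = \rho\,d\rho\,d\phi\,dz$$

Laplacian:

$$\nabla^2\Phi = \frac{1}{\rho}\frac{\partial}{\partial\rho}\left(\rho\frac{\partial\Phi}{\partial\rho}\right) + \frac{1}{\rho^2}\frac{\partial^2\Phi}{\partial\phi^2} + \frac{\partial^2\Phi}{\partial z^2}$$

Note that corresponding results can be obtained for polar coordinates in the plane by omitting the $z$ dependence. In such case for example, $ds^2 = d\rho^2 + \rho^2\,d\phi^2$, while the element of volume is replaced by the element of area, $dA = \rho\,d\rho\,d\phi$.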

2. Spherical coordinates

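Transformation equations:

$$x = r\sin\theta\cos\phi, \quad y = r\sin\theta\sin\phi, \quad z = r\cos\theta$$

where $r \ge 0$, $0 \le \theta \le \pi$, $0 \le \phi < 2\pi$.

Scale factors:

$$h_1 = 1, \quad h_2 = r, \quad h_3 = r\sin\theta$$

Element of arc length:

$$ds^2 = dr^2 + r^2\,d\theta^2 + r^2\sin^2\theta\,d\phi^2$$

Jacobian:

$$\frac{\partial(x, y, z)}{\partial(r, \theta, \phi)} = r^2\sin\theta$$

Element of volume:

$$dV = r^2\sin\theta\,dr\,d\theta\,d\phi$$

Laplacian:

$$\nabla^2\Phi = \frac{1}{r^2}\frac{\partial}{\partial r}\left(r^2\frac{\partial\Phi}{\partial r}\right) + \frac{1}{r^2\sin\theta}\frac{\partial}{\partial\theta}\left(\sin\theta\frac{\partial\Phi}{\partial\theta}\right) + \frac{1}{r^2\sin^2\theta}\frac{\partial^2\Phi}{\partial\phi^2}$$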

Other types of coordinate systems are possible.
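The Jacobian of the spherical transformation, which equals r² sin θ, can be checked numerically. The sketch below is an illustration only: it assumes the convention x = r sin θ cos φ, y = r sin θ sin φ, z = r cos θ, approximates the partial derivatives by central differences with a step size we chose arbitrarily, and uses function names of our own.

```python
import math


def spherical_to_cartesian(r, theta, phi):
    """Map (r, theta, phi) to (x, y, z); theta is measured from the z axis."""
    return (r * math.sin(theta) * math.cos(phi),
            r * math.sin(theta) * math.sin(phi),
            r * math.cos(theta))


def numerical_jacobian(transform, u, step=1e-6):
    """Determinant of the matrix of partial derivatives, by central differences."""
    tangents = []
    for i in range(3):
        forward, backward = list(u), list(u)
        forward[i] += step
        backward[i] -= step
        p, m = transform(*forward), transform(*backward)
        tangents.append([(x - y) / (2 * step) for x, y in zip(p, m)])
    a, b, c = tangents  # derivatives of (x, y, z) with respect to u1, u2, u3
    # Triple scalar product a . (b x c) equals the 3 x 3 determinant.
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


r, theta, phi = 2.0, 0.7, 1.1
print(numerical_jacobian(spherical_to_cartesian, (r, theta, phi)),
      r ** 2 * math.sin(theta))
```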

特殊な曲線座標

1. 円柱座標系

変換式:

ここで ,

,

弧長要素:

ヤコビアン:

体積要素:

ラプラシアン:

依存性を省略した平面内での極座標系で対応する結果が得られることに注意してください.そのような場合,例えば

ここで体積要素は面積要素

で置換されます.

2. 球面座標系

変換式:

ここで .

スケール因子:

弧長要素:

ヤコビアン:

体積要素:

ラプラシアン:

他の種類の座標系も可能です.

Gradient, divergence, curl and Laplacian in orthogonal curvilinear coordinates

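If $\Phi$ is a scalar function and $\mathbf{A} = A_1\mathbf{e}_1 + A_2\mathbf{e}_2 + A_3\mathbf{e}_3$ a vector function of orthogonal curvilinear coordinates $u_1$, $u_2$, $u_3$, we have the following results.

1. $\displaystyle \nabla\Phi = \operatorname{grad}\Phi = \frac{1}{h_1}\frac{\partial\Phi}{\partial u_1}\mathbf{e}_1 + \frac{1}{h_2}\frac{\partial\Phi}{\partial u_2}\mathbf{e}_2 + \frac{1}{h_3}\frac{\partial\Phi}{\partial u_3}\mathbf{e}_3$

2. $\displaystyle \nabla\cdot\mathbf{A} = \operatorname{div}\mathbf{A} = \frac{1}{h_1h_2h_3}\left[ \frac{\partial}{\partial u_1}(h_2h_3A_1) + \frac{\partial}{\partial u_2}(h_3h_1A_2) + \frac{\partial}{\partial u_3}(h_1h_2A_3) \right]$

3. $\displaystyle \nabla\times\mathbf{A} = \operatorname{curl}\mathbf{A} = \frac{1}{h_1h_2h_3}\begin{vmatrix} h_1\mathbf{e}_1 & h_2\mathbf{e}_2 & h_3\mathbf{e}_3 \\ \dfrac{\partial}{\partial u_1} & \dfrac{\partial}{\partial u_2} & \dfrac{\partial}{\partial u_3} \\ h_1A_1 & h_2A_2 & h_3A_3 \end{vmatrix}$

4. $\displaystyle \nabla^2\Phi = \text{Laplacian of } \Phi = \frac{1}{h_1h_2h_3}\left[ \frac{\partial}{\partial u_1}\left( \frac{h_2h_3}{h_1}\frac{\partial\Phi}{\partial u_1} \right) + \frac{\partial}{\partial u_2}\left( \frac{h_3h_1}{h_2}\frac{\partial\Phi}{\partial u_2} \right) + \frac{\partial}{\partial u_3}\left( \frac{h_1h_2}{h_3}\frac{\partial\Phi}{\partial u_3} \right) \right]$

These reduce to the usual expressions in rectangular coordinates if we replace $(u_1, u_2, u_3)$ by $(x, y, z)$, in which case $\mathbf{e}_1$, $\mathbf{e}_2$ and $\mathbf{e}_3$ are replaced by $\mathbf{i}$, $\mathbf{j}$ and $\mathbf{k}$ and $h_1 = h_2 = h_3 = 1$.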
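As a worked illustration of result 4, consider cylindrical coordinates, assuming the identification $(u_1, u_2, u_3) = (\rho, \phi, z)$ with scale factors $h_1 = 1$, $h_2 = \rho$, $h_3 = 1$. Substituting into the general Laplacian, with prefactor $1/(h_1h_2h_3) = 1/\rho$, gives the familiar cylindrical form:

```latex
% Assumed: (u_1, u_2, u_3) = (\rho, \phi, z), h_1 = 1, h_2 = \rho, h_3 = 1
\begin{align*}
\nabla^2\Phi
  &= \frac{1}{\rho}\left[
       \frac{\partial}{\partial\rho}\left(\rho\,\frac{\partial\Phi}{\partial\rho}\right)
     + \frac{\partial}{\partial\phi}\left(\frac{1}{\rho}\,\frac{\partial\Phi}{\partial\phi}\right)
     + \frac{\partial}{\partial z}\left(\rho\,\frac{\partial\Phi}{\partial z}\right)
     \right] \\
  &= \frac{1}{\rho}\frac{\partial}{\partial\rho}\left(\rho\,\frac{\partial\Phi}{\partial\rho}\right)
     + \frac{1}{\rho^2}\frac{\partial^2\Phi}{\partial\phi^2}
     + \frac{\partial^2\Phi}{\partial z^2}
\end{align*}
```

The second line follows because $\rho$ does not depend on $\phi$ or $z$ and can be taken outside those derivatives.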

直交曲線座標における勾配,発散,回転およびラプラシアン

仮に が一つのスカラー関数であり,また

が直交曲線座標

,

,

のベクトル関数の時,下記の結果を得ます.

1.

2. $\displaystyle \nabla\cdot\mathbf{A} = \operatorname{div}\mathbf{A} = \frac{1}{h_1h_2h_3}\left[ \frac{\partial}{\partial u_1}(h_2h_3A_1) + \frac{\partial}{\partial u_2}(h_3h_1A_2) + \frac{\partial}{\partial u_3}(h_1h_2A_3) \right]$

3.

4. $\displaystyle \nabla^2\Phi = \text{Laplacian of } \Phi = \frac{1}{h_1h_2h_3}\left[ \frac{\partial}{\partial u_1}\left( \frac{h_2h_3}{h_1}\frac{\partial\Phi}{\partial u_1} \right) + \frac{\partial}{\partial u_2}\left( \frac{h_3h_1}{h_2}\frac{\partial\Phi}{\partial u_2} \right) + \frac{\partial}{\partial u_3}\left( \frac{h_1h_2}{h_3}\frac{\partial\Phi}{\partial u_3} \right) \right]$

仮に を

で置換すると,以下の場合,つまり

,

および

が

,

および

で置換され,

で置換されるような場合などには,これらの結果は直交座標系の通常の式に短縮されます.

Orthogonal curvilinear coordinates. Jacobians

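The transformation equations

$$x = f(u_1, u_2, u_3), \quad y = g(u_1, u_2, u_3), \quad z = h(u_1, u_2, u_3) \tag{17}$$

where we assume that $f$, $g$, $h$ are continuous, have continuous partial derivatives and have a single-valued inverse, establish a one-to-one correspondence between points in an $xyz$ and a $u_1u_2u_3$ rectangular coordinate system. In vector notation the transformation (17) can be written

$$\mathbf{r} = x\mathbf{i} + y\mathbf{j} + z\mathbf{k} = f(u_1, u_2, u_3)\,\mathbf{i} + g(u_1, u_2, u_3)\,\mathbf{j} + h(u_1, u_2, u_3)\,\mathbf{k} \tag{18}$$

A point $P$ can be defined not only by rectangular coordinates $(x, y, z)$ but by coordinates $(u_1, u_2, u_3)$ as well. We call $(u_1, u_2, u_3)$ the curvilinear coordinates of the point.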

If $u_2$ and $u_3$ are constant, then as $u_1$ varies, $\mathbf{r}$ describes a curve which we call the $u_1$ coordinate curve. Similarly we define the $u_2$ and $u_3$ coordinate curves through $P$.

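From (18), we have

$$d\mathbf{r} = \frac{\partial\mathbf{r}}{\partial u_1}\,du_1 + \frac{\partial\mathbf{r}}{\partial u_2}\,du_2 + \frac{\partial\mathbf{r}}{\partial u_3}\,du_3 \tag{19}$$

The vector $\partial\mathbf{r}/\partial u_1$ is tangent to the $u_1$ coordinate curve at $P$. If $\mathbf{e}_1$ is a unit vector at $P$ in this direction, we can write $\partial\mathbf{r}/\partial u_1 = h_1\mathbf{e}_1$ where $h_1 = |\partial\mathbf{r}/\partial u_1|$. Similarly we can write $\partial\mathbf{r}/\partial u_2 = h_2\mathbf{e}_2$ and $\partial\mathbf{r}/\partial u_3 = h_3\mathbf{e}_3$, where $h_2 = |\partial\mathbf{r}/\partial u_2|$ and $h_3 = |\partial\mathbf{r}/\partial u_3|$ respectively. Then (19) can be written

$$d\mathbf{r} = h_1\,du_1\,\mathbf{e}_1 + h_2\,du_2\,\mathbf{e}_2 + h_3\,du_3\,\mathbf{e}_3$$

The quantities $h_1$, $h_2$, $h_3$ are sometimes called scale factors.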

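If $\mathbf{e}_1$, $\mathbf{e}_2$, $\mathbf{e}_3$ are mutually perpendicular at any point $P$, the curvilinear coordinates are called orthogonal. In such case the element of arc length $ds$ is given by

$$ds^2 = d\mathbf{r}\cdot d\mathbf{r} = h_1^2\,du_1^2 + h_2^2\,du_2^2 + h_3^2\,du_3^2$$

and corresponds to the square of the length of the diagonal in the parallelepiped whose edges are $h_1\,du_1\,\mathbf{e}_1$, $h_2\,du_2\,\mathbf{e}_2$ and $h_3\,du_3\,\mathbf{e}_3$.

Also, in the case of orthogonal coordinates the volume of the parallelepiped is given by

$$dV = \left| (h_1\,du_1\,\mathbf{e}_1)\cdot(h_2\,du_2\,\mathbf{e}_2)\times(h_3\,du_3\,\mathbf{e}_3) \right| = h_1h_2h_3\,du_1\,du_2\,du_3$$

which can be written as

$$dV = \left| \frac{\partial\mathbf{r}}{\partial u_1}\cdot\frac{\partial\mathbf{r}}{\partial u_2}\times\frac{\partial\mathbf{r}}{\partial u_3} \right| du_1\,du_2\,du_3 = \left| \frac{\partial(x, y, z)}{\partial(u_1, u_2, u_3)} \right| du_1\,du_2\,du_3$$

where

$$\frac{\partial(x, y, z)}{\partial(u_1, u_2, u_3)} = \begin{vmatrix} \dfrac{\partial x}{\partial u_1} & \dfrac{\partial x}{\partial u_2} & \dfrac{\partial x}{\partial u_3} \\ \dfrac{\partial y}{\partial u_1} & \dfrac{\partial y}{\partial u_2} & \dfrac{\partial y}{\partial u_3} \\ \dfrac{\partial z}{\partial u_1} & \dfrac{\partial z}{\partial u_2} & \dfrac{\partial z}{\partial u_3} \end{vmatrix}$$

is called the Jacobian of the transformation.

It is clear that when the Jacobian is identically zero there is no parallelepiped. In such case there is a functional relationship between $x$, $y$ and $z$, i.e. there is a function $\phi$ such that $\phi(x, y, z) = 0$ identically.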
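The scale factors and the orthogonality condition can be explored numerically for a concrete transformation. The sketch below takes cylindrical coordinates as the example and approximates the tangent vectors by central differences; the function names, the step size and the tolerance are our own assumptions.

```python
import math


def cylindrical_to_cartesian(rho, phi, z):
    """Example transformation: x = rho cos(phi), y = rho sin(phi), z = z."""
    return (rho * math.cos(phi), rho * math.sin(phi), z)


def tangent_vectors(transform, u, step=1e-6):
    """Approximate the partial derivatives of r with respect to u1, u2, u3."""
    vectors = []
    for i in range(3):
        forward, backward = list(u), list(u)
        forward[i] += step
        backward[i] -= step
        p, m = transform(*forward), transform(*backward)
        vectors.append([(a - b) / (2 * step) for a, b in zip(p, m)])
    return vectors


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scale_factors(transform, u):
    """h_i is the length of the tangent vector along the u_i coordinate curve."""
    return [math.sqrt(dot(t, t)) for t in tangent_vectors(transform, u)]


def is_orthogonal(transform, u, tol=1e-6):
    """True if the three tangent vectors are mutually perpendicular at u."""
    t = tangent_vectors(transform, u)
    return all(abs(dot(t[i], t[j])) <= tol
               for i in range(3) for j in range(i + 1, 3))


point = (2.0, 0.5, 1.0)
print(scale_factors(cylindrical_to_cartesian, point))
print(is_orthogonal(cylindrical_to_cartesian, point))
```

For an orthogonal system the product of the three scale factors gives the volume element per unit du1 du2 du3, which for this example is approximately the value of rho at the chosen point.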

直交曲線座標とヤコビアン

以下の 変換式 は

ここで以下の前提を置きます.つまり ,

,

は連続であり,連続な偏微分を有し,直交座標系

と

との間で点が 1 対 1 に対応する単一の逆関数が確立しているとします.ベクトル記法においては変換式 (17) は以下のように書けます.

ある点 は 直交座標

だけで定義出来るだけでなく,座標

によっても定義できます.この

をその点の 曲線座標 と呼びます.

仮に および

が定数であり,

が変化すると,

は u1 座標曲線 と呼ばれる曲線を記述します.同様に

を通る

および

座標曲線を定義できます.

式 (18) から以下が得られます.

ベクトル は点

における

座標曲線へのタンジェントです.仮に

が点

におけるこの方向への単位ベクトルならこのように書けます,

と.ここで

です.同様に次のように書けます.

また

ここで,それぞれ

また

です.ゆえに式 (19) は以下のように書けます.

量 ,

,

は時に スケール因子 と呼びます.

仮に ,

,

が点

において相互に垂直とすると,その曲線座標を 直交 と呼びます.そのような場合,弧長の要素

は以下により与えられます.

また上記の直方体の対角線の長さの2乗に対応します.

また,直交座標においては直方体の体積は以下により与えられます.

ここで次のように記述できます.

ここで,

上記は変換式の ヤコビアン と呼ばれます.

ヤコビアンがゼロに等しい時に直方体が存在しないことは明らかです.そのような場合には ,

および

の間には関数関係が存在します.例えば

があって,

のような場合です.